There are so many interesting and exciting tasks. When developers get such tasks, they usually try to prepare for surprises and difficulties. In such cases, we spend more effort on planning and add extra time to reduce the risk of missing deadlines.

Problem statement

I faced a task that at first glance seemed like trivial routine. One of our projects involves Solidity. The team works its black voodoo magic on a Solidity bytecode analyzer that looks for vulnerabilities. To show users exactly where in their code a vulnerability is, the analyzer uses, among other things, source mappings. One of the subtasks is to parse these source mapping strings.

In short, it looks like this: a string in this format represents a list of nodes separated by the semicolon symbol (‘;’). Each node consists of 5 elements (s:l:f:j:m) separated by the colon symbol (‘:’). More details about the node’s fields and their possible values can be found in the documentation. This is an example string: “1:2:1;1:9:1;2:1:2;2:1:2;2:1:2”.

These strings are not as simple as they look. To compress these source mappings especially for bytecode, the following rules are used:

- If a field is empty, the value of the preceding element is used.

- If a ‘:’ is missing, all following fields are considered empty.

So the implementation of these 2 rules leads to the transformation:

“1:2:1;:9;2:1:2;;” => “1:2:1;1:9:1;2:1:2;2:1:2;2:1:2”
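To make the two rules concrete, here is a minimal Python sketch of the decompression. It is an illustration rather than the project's code: the `decompress` name and the `fields` parameter are assumptions, and every node is returned as a tuple of five strings in which fields that were never set stay empty.

```python
def decompress(source_map, fields=5):
    """Expand a compressed source mapping into a list of full nodes."""
    current = [""] * fields  # values carried over from the preceding node
    nodes = []
    for entry in source_map.split(";"):
        # Rule 2: a missing ':' simply yields fewer parts, so the
        # remaining fields keep the values they already have.
        for i, part in enumerate(entry.split(":")[:fields]):
            # Rule 1: an empty field reuses the preceding node's value.
            if part:
                current[i] = part
        nodes.append(tuple(current))
    return nodes


if __name__ == "__main__":
    for node in decompress("1:2:1;:9;2:1:2;;"):
        print(":".join(node[:3]))
```

Note that this version builds the whole collection, which is exactly what becomes expensive on very large inputs.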

Actually, the task is elementary. As input, the function receives a compressed string and an index. The function returns the decompressed node at that index. EZ! The only trick is that input strings can be very large (84 610 000 symbols in our tests).

The first implementation ran for about 40 seconds. Unfortunately, its sources are lost. The main idea of that algorithm was flawed: the input string was split on the semicolon symbol, and then all nodes were parsed one by one from the beginning of the collection. Because this solution was so slow, it needed optimization.

Solution one

First, I removed all unnecessary operations. We do not need a collection of nodes; we are looking for just one specific node. So I walk through the input string from the beginning and search for the symbol ‘;’. Then I take the substring and fill the current node with the values from it. This repeats until the current index equals the destination index (or the end of the input string is reached).
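The walk described above can be sketched roughly as follows. This is an illustration under assumptions, not the original listing: the signature of `node_at` and the tuple return type are guesses, and an index past the last node simply returns the last node.

```python
def node_at(source_map, index, fields=5):
    """Return the decompressed node at `index` without building a list."""
    current = [""] * fields
    length = len(source_map)
    pos = 0      # start of the node being read
    node_no = 0  # index of the node being read
    while True:
        end = source_map.find(";", pos)
        if end == -1:
            end = length  # last node: no trailing separator
        # Fill the running node from the substring between separators.
        for i, part in enumerate(source_map[pos:end].split(":")[:fields]):
            if part:
                current[i] = part
        if node_no == index or end == length:
            return tuple(current)
        pos = end + 1
        node_no += 1


if __name__ == "__main__":
    print(node_at("1:2:1;:9;2:1:2;;", 1))
```

Only one short substring exists at any time, so memory use stays flat no matter how long the input is.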

The first solution looks like this:

It is not the most accurate way to measure a function's performance, but it is suitable for this case. We don't need to fight for microseconds, right?
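For reference, a coarse wall-clock measurement of this kind can be sketched with a small decorator. The `timed` name is hypothetical and the printed format is only an assumption modelled on the timing output quoted in this article; `count_nodes` is a stand-in function used for the demonstration.

```python
import functools
import time


def timed(func):
    """Print how long each call to `func` takes (wall-clock, coarse)."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.time()
        result = func(*args, **kwargs)
        print(f"{func.__name__} took: {time.time() - start}")
        return result
    return wrapper


@timed
def count_nodes(source_map):
    # Stand-in workload: n separators delimit n + 1 nodes.
    return source_map.count(";") + 1


if __name__ == "__main__":
    count_nodes("1:2:1;:9;2:1:2")
```

For finer measurements `time.perf_counter()` or the `timeit` module would be the usual choice, but for runs that take seconds this is enough.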

In case somebody needs to profile Python scripts, here is my example code snippet:

    # place your filename instead of sourcemappings.py
    python -m cProfile -o out.prof sourcemappings.py && snakeviz out.prof

As a result of this snippet, we get the following beautiful web page:

The execution of the first version of the algorithm took almost 9 seconds, which is not bad:

node_at took: 8.769877910614014

Compared to the previous version, that is about 4 times faster. Excellent, I would say.

In theory, this was the point to calm down and go do something useful. But I wondered whether there is another, more effective solution for this task. For example, new string and text processing instructions were introduced in SSE4.2. Maybe they can be helpful here? Or perhaps it's all about some internal mechanisms of Python?

Solution two

So, I decided to continue my research. To begin with, I implemented almost the same thing in golang. Here is the source code:

Interestingly, at first I did it without the switch; the code was simple and clean, but it ran a little slower. The difference is about 0.1-0.2 seconds on average, but still… This version takes 0.88 seconds on average (on the same input data, of course). It is ten times faster than my fastest Python solution!

I suggested that the team connect the existing Python solution to this golang based daemon. We could send the input string and destination index via redis, a socket, a pipe, etc. We could even create a file with the content in /dev/shm/. I thought that in all these cases the overhead would be smaller than the gain. But this solution was rejected: too much time was spent on sending the request and getting the result back. Unfortunately, there are no profiling results for this solution; I took the word of the team involved in the project.

Then I decided to write a C library, because it can be easily used in Python applications. This is the result of that effort:

There are more lines of source code here because there are two different functions. One of them cannot work with read-only strings, because it modifies the input through the strsep function. On the other hand, it is implemented in 6-7 lines of code, quite concisely and efficiently.

    static node_t node_at_erasing(char *str, int ix) {
        node_t res = {0};
        char *tok_node = NULL;
        char *tok_part = NULL;
        int nc = -1;
        /* Pointers to the five fields, in s:l:f:j:m order. */
        char **dst[5] = {&res.s, &res.l, &res.f, &res.j, &res.m};
        /* strsep overwrites each ';' with '\0', so str must be writable. */
        for (; (tok_node = strsep(&str, ";")) != NULL && nc < ix ; ++nc) {
            char ***p_dst = dst;
            /* An empty part keeps the value left by the preceding node. */
            for (; (tok_part = strsep(&tok_node, ":")) != NULL; ++p_dst) {
                **p_dst = (*tok_part ? tok_part : **p_dst);
            }
        }
        return res;
    }

The second function does not modify the input string and works even faster than the first one. After a couple of sessions with the valgrind profiler, I came up with this result: 0.1 seconds. This option completely suited me, and I calmly went to bed because I had been red-eyed over it for quite some time.

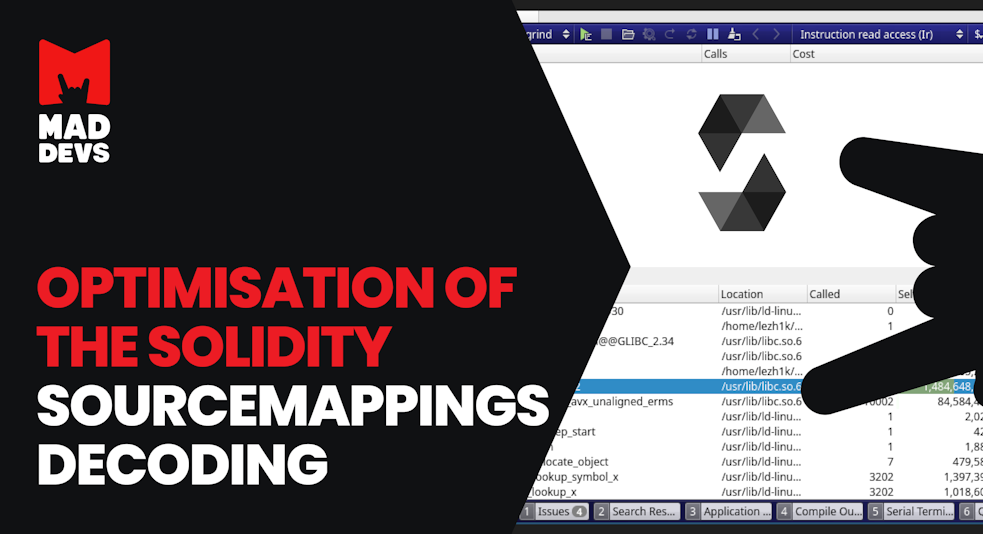

There are two screenshots below demonstrating why the C version works so fast. It uses SSE4.2 and AVX instructions for string operations:

After a couple of days of testing by the team, I received the feedback: it doesn’t work for them. It turns out that we need to put all the nodes in the collection and then process them further with some tricky algorithm.

Solution three

Well! Back to the Python solution, because why do we need all these C-bindings and ctypes? I modified the source code a little bit and came to this solution:

The implementation did not take much time and works relatively fast: about 10 seconds. Maybe that will be enough, but I have prepared a C-based solution in advance.

I had to modify the source code and the algorithm a little bit. Full source code is available here.

The main idea is still very simple:

- We do the same as during the search but simultaneously write the current node to the output collection. This means the string is processed in 2 passes.

- In the first pass, we calculate how many nodes the string contains by counting the semicolon symbols.

- Then we allocate enough memory and fill it with the data.
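The two-pass idea can be sketched in Python as well, even though the real gain comes from doing it in C. This is an illustration under assumptions, not the library's code: `decompress_all` is a hypothetical name, and the preallocated list stands in for the single memory allocation.

```python
def decompress_all(source_map, fields=5):
    """Two passes: count the nodes first, then fill a preallocated list."""
    # Pass 1: n separators delimit n + 1 nodes.
    count = source_map.count(";") + 1
    nodes = [None] * count  # allocate the output once

    # Pass 2: same walk as the single-node search, storing every node.
    current = [""] * fields
    pos = 0
    for k in range(count):
        end = source_map.find(";", pos)
        if end == -1:
            end = len(source_map)
        for i, part in enumerate(source_map[pos:end].split(":")[:fields]):
            if part:
                current[i] = part
        nodes[k] = tuple(current)
        pos = end + 1
    return nodes


if __name__ == "__main__":
    print(len(decompress_all("1:2:1;:9;2:1:2;;")))
```

Counting first avoids growing the output repeatedly, which matters far more for a C array than for a Python list.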

The only thing left is that we need to receive a pointer to an array of structures in Python code from the C library and then use this result. There are many different tools for this case. Experienced people recommend SWIG and CFFI. But there are just two small functions that I have to import. Why should I use such big tools for this? I chose ctypes. I wanted to use numpy arrays for the result, because of their internal representation. But I did not find how I should declare the type to use in the numpy array. If anybody knows it - please, leave a comment.

So, the library returns a pointer to an array of structures (nodes) allocated inside the library. I declared a dedicated function to free this memory. Let’s make an RAII wrapper around these functions to call the free procedure in the destructor. This solution is good enough, but it also has its drawbacks:
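A wrapper of this kind might look like the sketch below. It is an assumption-laden illustration, not the project's code: the `Node` layout (five `char *` fields) is inferred from the C snippet, `NodeArray` is a hypothetical name, and `free_func` stands for whatever free function the library exports. Loading the library with `ctypes.CDLL` is left out so that the sketch runs on its own.

```python
import ctypes


class Node(ctypes.Structure):
    # Assumed layout: five C string pointers, one per s:l:f:j:m field.
    _fields_ = [(name, ctypes.c_char_p) for name in ("s", "l", "f", "j", "m")]


class NodeArray:
    """Owns a C-allocated array of Node and frees it exactly once."""

    def __init__(self, ptr, count, free_func):
        self._ptr = ptr          # POINTER(Node) returned by the library
        self._count = count
        self._free = free_func   # the library's free function (assumed)

    def __len__(self):
        return self._count

    def __getitem__(self, i):
        if self._ptr is None:
            raise ValueError("array already freed")
        if not 0 <= i < self._count:
            raise IndexError(i)
        return self._ptr[i]

    def close(self):
        if self._ptr is not None:
            self._free(self._ptr)
            self._ptr = None     # guards against a double free

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        self.close()

    def __del__(self):
        self.close()
```

Using it as a context manager (`with NodeArray(...) as nodes:`) frees the memory at a predictable point, while `__del__` remains as a safety net.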

- Intellisense does not work in VSCode. The list of fields of the node object does not appear, and I have to look at the declaration.

- It is necessary to build the library before use.

The result is:

C took: 0.5122084617614746 last_node: b'9359':b'71':b'-1':b'o':b'8', nodes_count: 26830000

python took: 10.238852500915527 last_node: b'9359':b'71':b'-1':b'o':b'8', nodes_count: 26830000

Bottom line

I assume the speed can be increased even more tangibly. There are even thoughts that SSO (small string optimization) may reduce the number of memory copies. But we will leave that for future tasks or new material.

Actually, there are no new conclusions. When things need to be optimized, one can effortlessly link Python with efficient C libraries instead of bothering with Python code micro-optimization. A very workable option that suited our team.
